MIOpen is AMD’s counter-part of NVIDIA cuDNN. In this article, we’ll go through the implementation details of MIOpen for some typical APIs.

Solution finding for a given problem

Solution finding is the inevitable step to run the MIOpen routine.

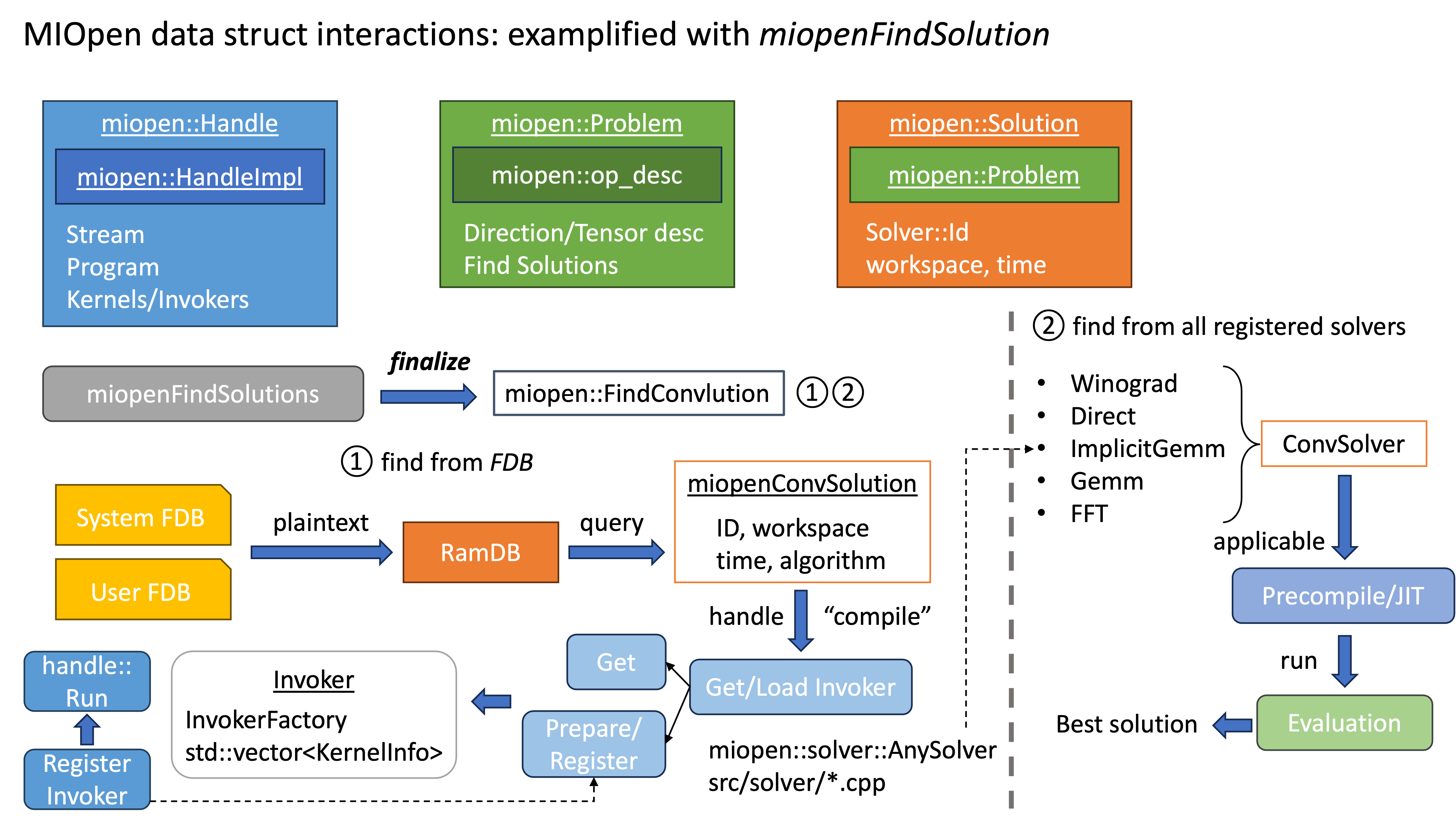

The diagram above demonstrates the underlying process when user calls miopenFindSolution.

We’ll show MIOpen basic conceptions and procedures based on this.

MIOpen users will call miopenFindSolutions to query the solutions sorted by either kernel duration or workspace size.

Inside MIOpen, how does MIOpen describe a problem, and how does it fetch and determine which solutions are “applicable” for a given problem?

Problem description

namespace miopen {

namespace solver {

enum class Primitive

{

Invalid,

Convolution,

Activation,

Batchnorm,

Bias,

Fusion,

Pooling,

};

}}There are different problem types (called primitive) supported in MIOpen.

Currently, MIOpen only support convolution to find the solution (other operators have their dedicated APIs, e.g., pooling calls the kernel directly with miopenPoolingForward).

MIOpen uses the abstract type miopen::Problem to store problem description, which is convertible to miopen::conv::ProblemDescription.

There contains the convolution parameters (direction, strides, paddings, dilations) used to determine the solutions (and the kernels) which are capable of solving this problem.

Solver and its source

The solution finding routine finally locates at one of the member functions in miopen::ConvolutionDescriptor, based of the problem type and direction:

FindConvFwdAlgorithmfor the activation, if forward and not transposed, or backward and transposedFindConvBwdDataAlgorithmfor the backward gradient, if backward not transposed, or forward and transposedFindConvBwdWeightsAlgorithmfor the weight updating gradient, if backward and the problem is for weight updating

In MIOpen, there are two approaches to evaluate which solution is most suitable (e.g., fastest, or requires minimal workspace size) for a given problem:

- from FindDB, which records all possible kernels being able to solve a specific problem, and their duration

- list all possible kernels, and run on the real hardware to measure their performance (i.e., pre-run)

In the aforementioned three FindConv* functions, MIOpen will first try to load the optimal solution from the FindDB, based on the queried records.

Then the library is attempting to “compile” the solution, if it’s not in the maintained invoker cache.

The compile procedure comprises of 3 steps:

- load the “program” (load ELF

.soshared library viahipModuleLoad), which is the most time-consuming - parse the function from the program (get the kernel object from HIP module via

hipModuleGetFunction) - register the invoker, i.e., put it into the invoker cache maintained by MIOpen, which is indexed by pair

(network_config, solver_id)or(network_config, algorithm), where the network config is the collection of operator parameters (strides, paddings, etc.)

If no record can be found in FindDB, MIOpen falls back to registered solutions which are linked against to the libMIOpen.so dynamic library during building.

MIOpen kernels are implemented based on some abstract design algorithms, such as the convolution operator can be materialized via:

DirectWinogradImplicitGemmFFT

A solver is a series of pre-defined kernels to implement one of these algorithms to cope with the given problem. Once MIOpen collects all applicable solvers for a problem, it will firstly compile the kernel codes (if any, haven’t been compiled during build time). And then run them on the target GPU to evaluate which one is optimal.

Invoker and InvokerFactory

Each src/solver/*.cpp defines a Solver struct which should return a ConvSolution in its member function GetSolution.

It specifies the kernel source file (located in src/kernels/*), macros and JIT compile options determined by the problem, as well as other options like tunable settings.

namespace miopen {

using Invoker = std::function<void(const Handle&, const AnyInvokeParams& primitive_parameters)>;

using InvokerFactory = std::function<Invoker(const std::vector<Kernel>&)>;

}Invoker is an invokable functor to substantially start the kernel, and InvokerFactory is the prepare step to specify their calling order, make sure the parameter format, etc.

Each solver constructs its own InvokerFactory.

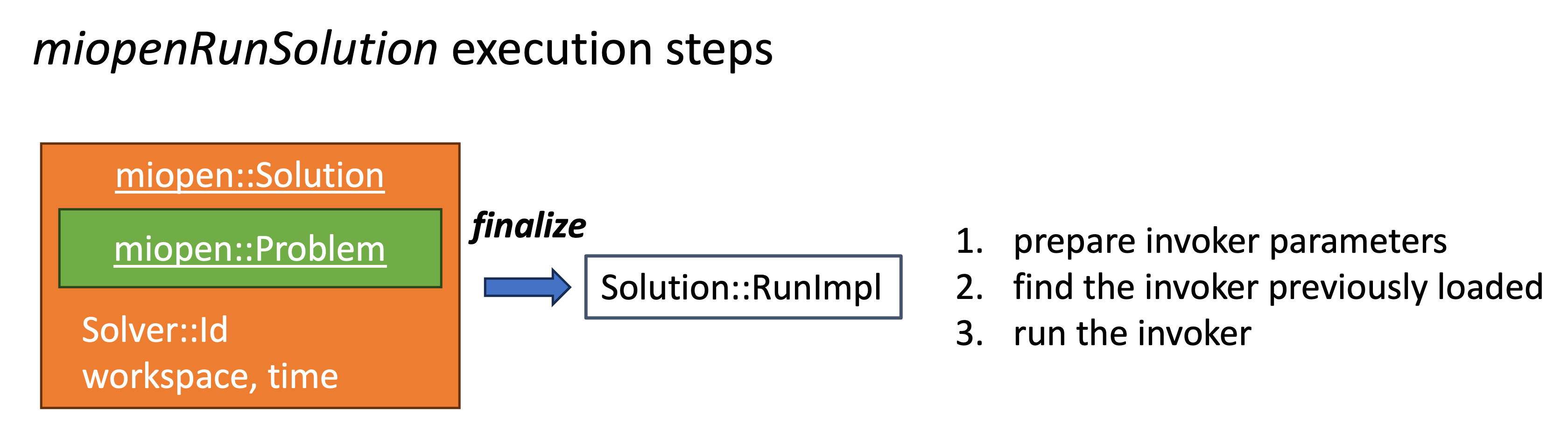

miopenRunSolution

After finding the optimal solution, MIOpen users will call miopenRunSolution to invoke the corresponding kernels.

If the invoker has been cached with handle previously (during the finding procedure), MIOpen can directly call the kernel.

Otherwise it has to repeat the aforementioned steps (load and then register).

More than convolution: other operations

As a DL primitive library, MIOpen also offers operators beyond convolution: pooling, activation, layer normalization, etc. Nevertheless, these types of operations do not require MIOpen to invoke the solution finding routine to determine the “optimal” solution in advance. Next we’ll try to demystify this doubt.

Todo

TODO…